Lightweight CNN based Meter Digit Recognition

This is an Open Access article distributed under the terms of the Creative Commons Attribution Non-Commercial License(https://creativecommons.org/licenses/by-nc/3.0/) which permits unrestricted non-commercial use, distribution, and reproduction in any medium, provided the original work is properly cited.

Abstract

Image processing is one of the major techniques used in computer vision, and researchers increasingly apply machine learning and deep learning to it. In recent years, digit recognition for the automatic reading of electric or water meters has been studied several times. However, previous studies have two major limitations: first, they rely on deep learning models with a large number of parameters, which increases the computational cost and power consumption; and second, they stop at detecting the digits and do not store them in a database or provide them to a mobile application. This paper proposes a system that reads the digits of a digital meter using a lightweight deep neural network (DNN) for low power consumption and sends them to an Android mobile application in real time, where they are stored. The proposed lightweight DNN is computationally inexpensive and exhibits accuracy similar to that of conventional DNNs.

Keywords:

Automatic meter reading, Lightweight Deep Neural Network, Image processing, Convolutional neural network

1. INTRODUCTION

Electricity is supplied to factories, houses, and other places, and in most of them digital meters are installed to monitor usage. In most cases, the reading is obtained manually: a meter reader looks at the meter and records the electricity or water use. Reading meter digits and sending bills to consumers in this way is time-consuming and error-prone [1]. Performing the reading automatically can reduce the rate of human error. Automatic meter reading (AMR), which amounts to detecting text in images, can be implemented using a camera mounted in front of the meter, and the same approach can be used for other tasks [1]. AMR can also reduce the time spent reading meters manually. It is usually addressed in three steps: screen detection, digit segmentation, and digit recognition.

All three stages are crucial; screen detection, for example, is needed to crop the display from the whole image of the meter. An appearance-feature-based approach using the histogram of oriented gradients (HOG) has been used for handwritten digit recognition [2]. A blob detection technique, maximally stable extremal regions (MSER), has been used to extract character candidates for a text detection system on natural scene images [3]. Similarly, HOG has been used with a support vector machine (SVM) to detect Chinese text in images [4]. An automatic reader for electromechanical meters has been proposed using HOG and SVM [1].

Text detection advanced considerably after the introduction of deep learning techniques, especially convolutional neural networks (CNNs), and the main reason for the rise of deep neural networks (DNNs) is the recent growth in the amount of available data. Lately, most conventional techniques used for AMR have been replaced by DNNs, and deep learning algorithms are increasingly applied to text recognition tasks. Several recent studies have used large datasets to train and evaluate their models [5,6]. Most previous studies were conducted on analog meters. Son et al. used YOLO v3 to detect the required region, as YOLO v1 does not perform well for small objects, and designed an architecture based on the VGG network to recognize digits [7]. Waqar et al. proposed a model based on Faster R-CNN for extraction and recognition, which is divided into three parts: the first is a CNN using the VGG architecture, the second is a region proposal network, and the third is the detection network [8]. These techniques can detect and recognize readings; however, the models are computationally expensive and consume a lot of power, which makes them almost impossible to deploy on edge devices. Moreover, consumers cannot benefit from them.

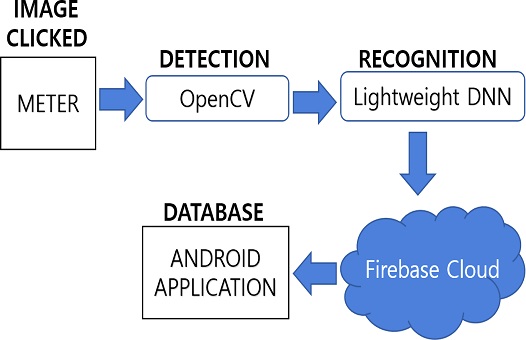

This paper focuses on performing recognition and displaying the extracted digits on a cell phone in real time. A camera is attached to the digital meter and pre-programmed to capture an image of the meter twice a day, at 12 AM and 12 PM. After the image is captured, pre-processing is performed to detect the required part, and then digit recognition is performed. The recognized digits are then sent to an Android mobile application built specifically to receive and store meter readings. The most significant part of the proposed system is its use of a lightweight model for training and validation. To build the lightweight model, different convolution filters are used; they are explained in the next section. The workflow of the proposed system is shown in Fig. 1. The fundamental contributions of the proposed work can be summarized as follows:

• Capturing an image of the meter at specified times and preprocessing it with OpenCV to extract the region of interest (detection).

• Recognizing the digits using a lightweight DNN built around three main convolutional layers.

• Sending the recognized digits to an Android mobile application and storing them using the Firebase real-time database.

2. PROPOSED APPROACH

2.1 Capturing and Detection

The capturing step consists of taking a picture of the meter with the attached camera. The system is designed to take pictures automatically at specified times and send them for further processing. After a picture is taken, the first task is to extract the region of interest, which in our case is the display that contains the meter reading. The captured images also contain redundant text that is not required for detection.
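As an illustration of the capture schedule, the following minimal sketch computes the next capture time for the two daily slots stated in the introduction (12 AM and 12 PM). The function name `next_capture` and the constant `CAPTURE_TIMES` are hypothetical, and how the camera is actually triggered is not described in this paper, so that part is left out.

```python
from datetime import datetime, time, timedelta

# Capture slots taken from the text: 12 AM (midnight) and 12 PM (noon).
CAPTURE_TIMES = (time(0, 0), time(12, 0))


def next_capture(now: datetime) -> datetime:
    """Return the first scheduled capture time strictly after `now`."""
    candidates = []
    # Looking at today and tomorrow is enough to always find a later slot.
    for day_offset in (0, 1):
        day = now.date() + timedelta(days=day_offset)
        for slot in CAPTURE_TIMES:
            candidates.append(datetime.combine(day, slot))
    return min(c for c in candidates if c > now)
```

A capture loop could sleep until `next_capture(datetime.now())`, take the picture, and repeat.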

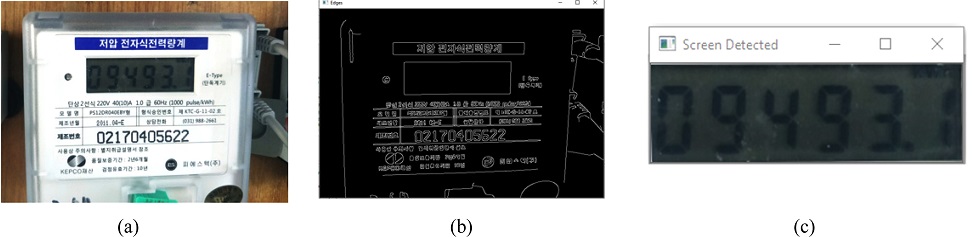

Deep learning techniques are widely used for detection in previous studies [6,8,9], but the proposed method does not use deep learning for this step because we aim for a model that is both computationally cheap and efficient. Instead, detection is performed using OpenCV, which provides promising results. The region of interest is detected through the steps described below. Fig. 2(a) depicts the captured image of the digital meter, to which a few preprocessing steps are applied to detect the desired display.

Fig. 2. Steps followed for detecting the display: (a) captured image, (b) edged image, (c) detected screen.

The captured image is first converted to black and white because OpenCV processes black-and-white images efficiently and the image size is reduced, which makes further processing (here, detecting the digital display on the meter) more convenient. A Gaussian filter is then applied to smooth the image and remove noise, followed by resizing and Canny edge detection to obtain the edged image (Fig. 2(b)). Canny edge detection is widely used in computer vision to extract structural information from an image; because it keeps only the edges, it makes the required part of the image easier to detect. In this case, it is used to detect the digital display, which is rectangular.

Therefore, we aim to extract only the rectangle containing the digits and discard all other information in the image. After the edged image is obtained, another OpenCV technique, contour detection, is used. Contours are continuous curves joining points of the same color or intensity; they are useful for shape analysis and can be used to locate and extract the desired region. Using this method, the required display is detected and extracted from the raw meter image (Fig. 2(c)).
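The detection steps described above (black-and-white conversion, Gaussian smoothing, resizing, Canny edge detection, and contour detection) can be sketched with OpenCV's Python bindings as follows. This is only an illustrative sketch, not the authors' implementation: the kernel size, the Canny thresholds, the working width of 500 pixels, the polygon-approximation tolerance, and the helper names `scale_box` and `detect_display` are all assumptions.

```python
def scale_box(box, scale):
    """Map an (x, y, w, h) box found on the resized image back to the original."""
    return tuple(int(round(v / scale)) for v in box)


def detect_display(image_bgr, width=500):
    """Return the cropped display region, or None if no rectangle is found."""
    import cv2  # imported lazily so this file loads even without OpenCV

    # Black-and-white conversion, then Gaussian smoothing to suppress noise.
    gray = cv2.cvtColor(image_bgr, cv2.COLOR_BGR2GRAY)
    blurred = cv2.GaussianBlur(gray, (5, 5), 0)  # 5x5 kernel is an assumption

    # Resize to a fixed width (assumed value), keeping the aspect ratio.
    h, w = blurred.shape[:2]
    scale = width / float(w)
    resized = cv2.resize(blurred, (width, int(h * scale)))

    # Edge map; the two thresholds are assumed values.
    edged = cv2.Canny(resized, 50, 200)

    # findContours returns 2 values in OpenCV 4.x and 3 values in 3.x;
    # indexing with [-2] selects the contour list in both cases.
    contours = cv2.findContours(
        edged, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE
    )[-2]

    # Take the largest contour that simplifies to four corners (a rectangle).
    for contour in sorted(contours, key=cv2.contourArea, reverse=True):
        perimeter = cv2.arcLength(contour, True)
        approx = cv2.approxPolyDP(contour, 0.02 * perimeter, True)
        if len(approx) == 4:
            x, y, bw, bh = scale_box(cv2.boundingRect(approx), scale)
            return image_bgr[y:y + bh, x:x + bw]
    return None
```

Choosing the largest four-cornered contour is one simple heuristic for a rectangular display; other selection rules (for example, an aspect-ratio check) would fit the description equally well.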

2.2 Recognition of Digits

The recognition of the digits is based on a lightweight DNN. Several types of convolutional filters have been developed in recent years, and the proposed network combines several of them to obtain a lightweight architecture. A lightweight model has few trainable parameters and thus consumes less power than conventional DNNs. Moreover, such models are easier to deploy on hardware devices, e.g., mobile devices or FPGAs, as they require less storage.

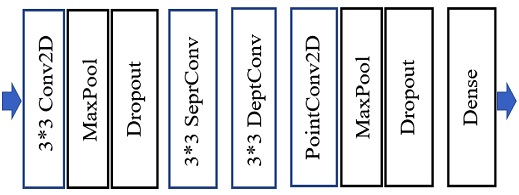

The convolution operation is widely used for computer vision tasks and is well suited to image processing. Normal, separable, depthwise, and pointwise convolutions are used in the proposed method. A convolution involves two steps: filtering and combining. In normal convolution, these steps are performed simultaneously. In separable convolution, the same two steps are performed, but by two different convolution operations applied in sequence: filtering is performed by a depthwise convolution and combining by a pointwise convolution. Depthwise convolution splits the image and the filters into their separate channels (three for an RGB image) and applies the convolution to each channel independently [10]. Pointwise convolution is the same as normal convolution but uses a 1 × 1 filter to perform the combining step. Using separable convolution reduces the size of the model and makes it computationally cheap [11,12]. The architecture of the proposed model is shown in Fig. 3.
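To make the saving concrete, the short sketch below counts the trainable parameters of a normal convolution and of a separable (depthwise plus pointwise) convolution. The function names are hypothetical, and the count assumes a single bias vector applied after the pointwise step, as in common framework implementations; the example uses a 3 × 3 kernel going from 28 to 56 channels.

```python
def conv2d_params(kernel, in_ch, out_ch, bias=True):
    """Normal convolution: every output channel filters every input channel."""
    return kernel * kernel * in_ch * out_ch + (out_ch if bias else 0)


def separable_conv2d_params(kernel, in_ch, out_ch, bias=True):
    """Separable convolution: depthwise filtering, then 1x1 pointwise combining."""
    depthwise = kernel * kernel * in_ch  # one k x k filter per input channel
    pointwise = in_ch * out_ch           # 1x1 filters mixing the channels
    return depthwise + pointwise + (out_ch if bias else 0)


normal = conv2d_params(3, 28, 56)                # 14168
separable = separable_conv2d_params(3, 28, 56)   # 1876
```

For this layer the separable form needs roughly 7.5 times fewer parameters than the normal form, which illustrates why replacing normal convolutions shrinks the model.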

The first convolution layer of the model is a normal 3 × 3 Conv2D with 28 filters, followed by max pooling and dropout. The second main convolution layer is a 3 × 3 SeparableConv2D with 56 filters. Using separable convolution makes the model cheaper than a normal CNN while providing similar accuracy. The next layer consists of one depthwise and one pointwise convolution layer, followed by max pooling and dropout. Finally, one dense layer is used as the output layer of the model.

Softmax is used as the activation function of the output layer. All convolution layers, including the depthwise and pointwise ones, are followed by the rectified linear unit (ReLU) activation function and a batch normalization (BN) layer. The main objective of designing a lightweight model is achieved by separating the two steps (filtering and combining) of the normal convolution operation, using a depthwise convolution layer for filtering and a pointwise convolution layer for combining. The number of parameters of the proposed method is compared with that of a normal convolutional neural network in the results section. A seven-segment digit dataset is used to train the lightweight DNN.
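The layer sequence described above can be sketched in Keras as follows. This is a hedged illustration rather than the authors' exact network: the input channel count, the number of classes, the padding, the pooling size, the dropout rate, the 112 pointwise filters, and the `Flatten` step are all assumptions, so the sketch is not expected to reproduce the parameter count reported in the results.

```python
INPUT_SHAPE = (28, 28, 1)  # assumption: 28 x 28 single-channel inputs
NUM_CLASSES = 10           # assumption: one class per digit 0-9


def build_lightweight_model(input_shape=INPUT_SHAPE, num_classes=NUM_CLASSES):
    """Build a small CNN following the layer order described in the text."""
    from tensorflow import keras  # imported lazily; requires TensorFlow
    from tensorflow.keras import layers

    return keras.Sequential([
        keras.Input(shape=input_shape),
        # First main layer: normal 3x3 convolution with 28 filters.
        layers.Conv2D(28, 3, padding="same", activation="relu"),
        layers.BatchNormalization(),
        layers.MaxPooling2D(pool_size=2),
        layers.Dropout(0.25),  # assumed rate
        # Second main layer: 3x3 separable convolution with 56 filters.
        layers.SeparableConv2D(56, 3, padding="same", activation="relu"),
        layers.BatchNormalization(),
        # Third main layer: depthwise (filtering) then pointwise (combining).
        layers.DepthwiseConv2D(kernel_size=3, padding="same", activation="relu"),
        layers.BatchNormalization(),
        layers.Conv2D(112, 1, activation="relu"),  # assumed filter count
        layers.BatchNormalization(),
        layers.MaxPooling2D(pool_size=2),
        layers.Dropout(0.25),  # assumed rate
        # Output: a single dense layer with softmax.
        layers.Flatten(),
        layers.Dense(num_classes, activation="softmax"),
    ])
```

Calling `build_lightweight_model().summary()` prints the per-layer parameter counts, which is a convenient way to see how much each convolution type contributes.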

2.3 Android Application

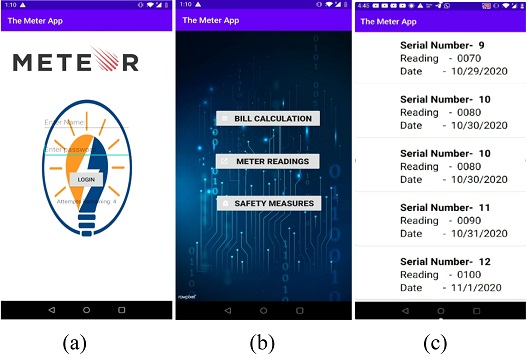

After the digits are detected and recognized, a database is needed to store the extracted readings and make the system user-friendly. For this purpose, an Android application is developed using Android Studio, and the recognized digits are transferred to and stored in the application in real time. The Android application contains four main pages.

The first page displays the user account log-in with user-specific credentials. The second page gives information on how the monthly electricity bill is calculated. It specifically explains the electricity-bill calculation procedure of South Korea, with all taxes and details. The third page stores and shows all the meter readings, each with a serial number and a date. A consumer can see previous usage information, as the application stores old readings with dates; this is useful for comparing the reading for the current date with that for the same date of the previous month and makes it easier to manage future usage.

Moreover, connectivity between the system and the Android application is a significant issue. Bluetooth and Wi-Fi are commonly used for data exchange, as they give reliable results, but neither can be used here because both are distance-dependent. To transfer digits between the system and the Android application in real time, the Firebase real-time database, which is not distance-dependent, is used. It is a cloud-hosted database that is synchronized in real time with the connected application, with updates propagated within milliseconds. It remains responsive even when the device is not connected to the Internet: it waits for the device to re-establish connectivity, and once the connection is restored, the database automatically synchronizes with the application according to the current state of the servers. Therefore, the Firebase real-time database is preferred for connectivity.
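One way the recognition side could push a reading to the database is through the Firebase Realtime Database REST interface, where a POST to a path ending in `.json` appends a record under an automatically generated key. The sketch below only illustrates that idea; the paper does not state how the data is uploaded, and the database URL, the `readings` path, the record fields, and the function names are hypothetical. It also assumes the database rules permit the write (a real deployment would normally add authentication).

```python
import json
import urllib.request
from datetime import date

# Hypothetical database URL; replace with the project's own.
DATABASE_URL = "https://example-meter-project.firebaseio.com"


def build_reading_request(reading, day, base_url=DATABASE_URL):
    """Build (without sending) a POST request that appends one meter reading."""
    payload = json.dumps({"date": day.isoformat(), "reading": reading})
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/readings.json",  # hypothetical path
        data=payload.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_reading(reading, day):
    """Send the reading and return the decoded JSON response."""
    request = build_reading_request(reading, day)
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)
```

The Android application would then listen on the same path and display each new record together with its date.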

An overview of the Android application is shown in Fig. 4, where 4(a) shows the front page, 4(b) shows the features offered by the application, and 4(c) shows the meter readings with their dates.

3. RESULTS

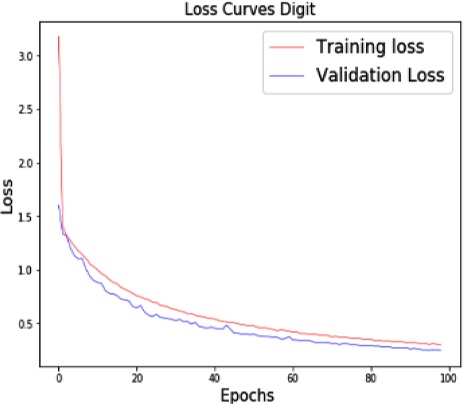

This section presents the experimental results of the proposed model. Training and testing are performed with the digital digit dataset. A total of 41,607 images of digital digits are used, each resized to 28 × 28 to ease the training process. Hyperparameters play an important role in achieving high accuracy and low loss. Several learning rates were tried, and 0.01 was selected as the initial learning rate, as it gave the best results. Stochastic gradient descent is one of the most frequently used optimizers for DNNs, but the Adam optimizer provided better results. An L2 regularizer is used with the convolutional layers. The model is trained for 99 epochs. The loss graph of the lightweight model during training is shown in Fig. 5. For performance analysis, the proposed model is compared with a conventional four-layered convolution model, as shown in Table 1. The comparison shows that the proposed network uses fewer parameters at the cost of about 2% lower accuracy than the four-layered CNN architecture. Given this small accuracy gap, the lightweight model is preferable where computational cost and power consumption matter.

Moreover, the proposed model is compared in Table 2 with previous models used for different AMR tasks. YOLO v3 and Fast-YOLO are used for the detection step in Refs. [7] and [9], respectively. Both build on YOLO, a deep learning detection model that requires prior training and is computationally expensive because training consumes a lot of power. Therefore, in the proposed work, OpenCV is used for the detection step, which requires neither deep learning nor prior training.

A VGG-based CNN and a CRNN are used for the recognition step in Refs. [7] and [9], respectively; both require a large number of parameters to train, which makes the models computationally expensive. In contrast, the proposed model uses a small network that requires only 186,471 parameters to train the recognition model.

4. CONCLUSIONS

This paper proposes a real-time AMR and storage system. At present, reading meter digits manually and generating bills is inefficient. The proposed system can overcome this issue and lets users view their daily electricity or water consumption on their mobile phones. This makes life easier, as users do not have to wait until the end of the month to learn about their usage. The proposed system uses OpenCV techniques to detect the meter display and a lightweight DNN architecture, which consumes less power, for recognition. It also addresses the issue of storing the extracted digits by using an Android application.

Using this method, users can also manage their electricity or water usage. The experimental results validate the system as an effective and lightweight deep learning model for digital digit recognition in AMR, which can be deployed on edge devices.

Acknowledgments

This work was supported by the Institute of Information & communications Technology Planning & Evaluation (IITP) grant funded by the Korea government (MSIT) (2020-0-01080, Variable-precision deep learning processor technology for high-speed multiple object tracking).

REFERENCES

[1] D. B. P. Quintanilha et al., “Automatic consumption reading on electromechanical meters using HoG and SVM,” 7th Latin American Conference on Networked and Electronic Media (LACNEM), Valparaiso, pp. 57-61, 2017. [https://doi.org/10.1049/ic.2017.0036]

[2] R. Ebrahimzadeh, M. Jampour, “Efficient Handwritten Digit Recognition based on Histogram of Oriented Gradients and SVM,” Int. J. of Comput. Appl., Vol. 104, pp. 10-13, Oct. 2014. [https://doi.org/10.5120/18229-9167]

[3] U. B. Karanje, R. Dagade, S. Shiravale, “Maximally Stable Extremal Region Approach for Accurate Text Detection in Natural Scene Images,” Int. J. of Sci. Develop. and Res., Vol. 1, Issue 11, Nov. 2016.

[4] B. Yu and H. Wan, “Chinese Text Detection and Recognition in Natural Scene Using HOG and SVM,” 6th International Conference on Information Technology for Manufacturing Systems, 2017.

[5] I. Gallo, A. Zamberletti, L. Noce, “Robust Angle Invariant GAS meter reading,” International Conference on Digital Image Computing: Techniques and Applications, Oct. 2015. [https://doi.org/10.1109/DICTA.2015.7371300]

[6] M. Cerman, G. Shalunts, D. Albertini, “A Mobile Recognition System for Analog Energy Meter Scanning,” Advances in Visual Computing, ISVC, Springer, Cham, Lecture Notes in Computer Science, Vol. 10072, pp. 247-256, 2016. [https://doi.org/10.1007/978-3-319-50835-1_23]

[7] C. Son, S. Park, J. Lee, J. Paik, “Deep Learning–based Number Detection and Recognition for Gas Meter Reading,” IEIE Trans. on Smart Processing & Computing, Vol. 8, No. 5, pp. 367-372, Oct. 2019. [https://doi.org/10.5573/IEIESPC.2019.8.5.367]

[8] M. Waqar, M. A. Waris, E. Rashid, N. Nida, S. Nawaz and M. H. Yousaf, “Meter Digit Recognition Via Faster R-CNN,” International Conference on Robotics and Automation in Industry (ICRAI), Rawalpindi, Pakistan, pp. 1-5, Mar. 2019. [https://doi.org/10.1109/ICRAI47710.2019.8967357]

[9] R. Laroca, V. Barroso, M. A. Diniz, G. R. Gonçalves, W. R. Schwartz, D. Menotti, “Convolutional neural networks for automatic meter reading,” J. Electron. Imag., Vol. 28, No. 1, pp. 1-38, 5 Feb. 2019. [https://doi.org/10.1117/1.JEI.28.1.013023]

[10] A. G. Howard, M. Zhu, B. Chen, D. Kalenichenko, W. Wang, T. Weyand, M. Andreetto, and H. Adam, “Mobilenets: Efficient convolutional neural networks for mobile vision applications,” Computer Vision and Pattern Recognition (CVPR), Vol. 1, pp. 1-9, Apr. 2017.

[11] F. Chollet, “Xception: Deep learning with depthwise separable convolutions,” Computer Vision and Pattern Recognition (CVPR), Vol. 3, pp. 1-8, Apr. 2017. [https://doi.org/10.1109/CVPR.2017.195]

[12] M. Sandler, A. Howard, M. Zhu, A. Zhmoginov, L. Chen, “MobileNetV2: Inverted Residuals and Linear Bottlenecks,” Computer Vision and Pattern Recognition (CVPR), Vol. 4, pp. 1-14, Mar. 2019. [https://doi.org/10.1109/CVPR.2018.00474]