A Multi-Sensor Fire Detection Method based on Trend Predictive BiLSTM Networks

This is an Open Access article distributed under the terms of the Creative Commons Attribution Non-Commercial License (https://creativecommons.org/licenses/by-nc/3.0/), which permits unrestricted non-commercial use, distribution, and reproduction in any medium, provided the original work is properly cited.

Abstract

Artificial intelligence techniques have improved fire-detection methods; however, false alarms still occur. Conventional methods detect fires using only current sensor values, which can lead to detection errors caused by temporary environmental changes or noise. Thus, fire-detection methods should include a trend analysis of past information. We propose a deep-learning-based fire detection method using multi-sensor data and Kendall's tau. The proposed system uses a BiLSTM model to predict fires from pre-processed multi-sensor data and extracted trend information. Kendall's tau expresses the trend of time-series data as a score, which makes it easy to obtain a target pattern. The experimental results showed that the proposed system with trend values achieved an accuracy of 99.93% for the BiLSTM and GRU models with a 20-tap moving average filter and a 40% fire threshold. Thus, the proposed trend approach is more accurate than conventional approaches.

Keywords:

Kendall’s tau, Multi sensors, Fire detection, Trend predictive, Deep learning, BiLSTM

1. INTRODUCTION

To reduce the false-alarm rate in fire detection, it is important to understand the interactions that occur during a fire and to discover the patterns and characteristics necessary for identifying fires. Recent research on fire detection has utilized machine learning and pattern recognition techniques to achieve robust and reliable detection. Sarwar et al. [1] predicted fire probability using adaptive neuro-fuzzy inference. Han et al. [2] used an array of eight MOX sensors to map time-series data onto pseudo-image-matrix data and then used convolutional neural networks to classify mixed gases. Saponara et al. [3] proposed a real-time video-based fire and smoke detection method using YOLO and CNN. However, image-based methods have slow response times because of the difficulty in capturing clear images of smoke or flames during the early stages of fire ignition [4]. Gas-sensor-based fire detection methods are useful for identifying fires in their early stages because they measure the gases generated when ignition occurs. However, gas-sensor-based machine learning methods exhibit significant variations in false-alarm rates depending on the input data. Detection accuracy can be influenced by the quality, type, and number of sensors used. Various studies have proposed different approaches to improve accuracy using multi-sensor data. Yan et al. [5] normalized their data based on the correlations between sensors, whereas Freeman et al. [6] enhanced the predictive performance by adaptively inputting sensor data features into deep learning models. These approaches effectively manage data variability, resulting in high detection accuracy [7]. Following this line of work, multi-sensor fire detection methods have been proposed that utilize not only sensor data but also trend analysis. Unlike conventional methods that consider only the current values of data, this approach establishes a relationship between fire detection and sensor data trends. Nakip et al. [8] evaluated the performance of rTPNN-based models for trend prediction using multivariate time-series data generated by multi-sensor detectors. Wu et al. [9] extracted trends from temperature, smoke, and CO sensors and predicted fire occurrence based on a backpropagation neural network. Such trend-based fire detection is robust to transient changes in sensor data and provides a significant advantage [10]. However, these studies have pointed out that predictive performance may vary depending on the trend value extraction and multi-sensor data fusion methods. Therefore, further research is required to determine effective trend analysis and sensor data integration methods.

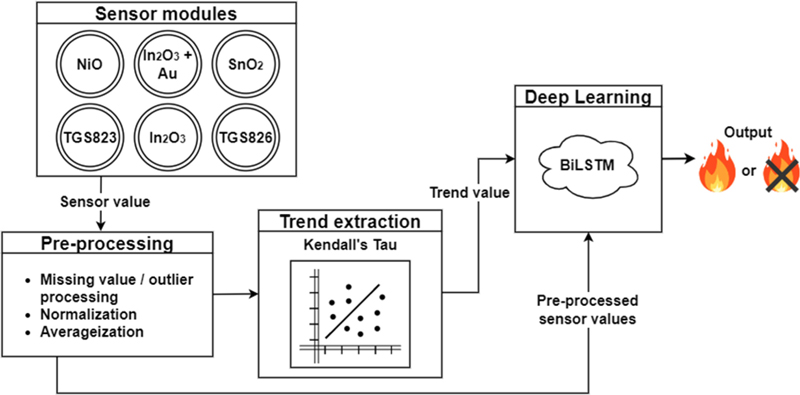

We propose the fire detection method shown in Fig. 1 that fuses multi-sensor data and trend values based on Kendall’s tau algorithm into BiLSTM models. This method predicts the occurrence of fire based on the values of six gas sensors and their trend values under various conditions. Trend values were extracted using the modified Kendall's tau, which is a statistical method for measuring the rank correlation between two variables. The proposed detection method performs fire prediction using a deep learning model, BiLSTM, by inputting sensor and trend values. The BiLSTM model provides more accurate predictions by processing time-series data and considering temporal relationships.

2. EXPERIMENTAL

2.1 Proposed Fire Detection System

The proposed fire-detection system comprises five main stages: data collection, pre-processing, trend-value extraction, data augmentation, and fire prediction. In the data collection stage, fire-related physicochemical data were collected from a multi-sensor module that included smoke and semiconductor oxide-gas sensors. During the pre-processing stage, various pre-processing tasks such as outlier/missing data removal, normalization, and averaging were performed on the collected sensor data. The trend value extraction stage involves applying Kendall’s tau algorithm to calculate the trend values from the pre-processed sensor data. In the data augmentation stage, a sliding window algorithm is applied to the sensor data and trend values to increase the amount of data. During the fire prediction stage, the augmented sensor data and trend values are fed into the BiLSTM model to predict the occurrence of fire. Through this entire system pipeline, multi-sensor data and trend information are organically integrated and utilized for fire prediction. The pre-processing and augmentation of data improve the quality of the model inputs, and the capability of BiLSTM to model time-series data makes it easier to capture fire-related temporal patterns.

2.2 Fire Dataset

The experiment data used were based on the dataset obtained by Kim et al. [10]. This research utilized data from NiO, In2O3+Au, SnO2, and In2O3 gas sensors and TGS823 and TGS826 commercial gas sensors when heated at various indoor temperatures as shown in Table 1. Details regarding the training set environment, processing, and trend extraction are discussed in subsequent sections.

The dataset experiment was conducted by heating 5 g of PVC cable at 50, 100, 200, and 350 °C. Initially, non-fire-state data were collected, followed by the collection of fire-state data at a specific heating point [10]. We used data from six oxide semiconductor gas sensors for the model prediction. Because this dataset is based on sensor measurements collected at four temperatures, it supports fire detection across various temperature environments. However, the experiments for the dataset were limited to results obtained over a relatively short period. Therefore, to apply this method to real-world scenarios, it is necessary to supplement the findings with sensor data observed over a longer duration.

First, the raw resistance values, which initially existed as simple log data, were transformed into structured data. Unlike most sensors in which the resistance decreases upon heating, an NiO sensor exhibits an upward curve when reacting with a target gas. To facilitate data comparison, the inverse of the NiO sensor values was used:

$$R_{inv} = \frac{1}{R_{NiO}} \tag{1}$$

where Rinv represents the inverted NiO sensor value and RNiO is the original NiO sensor value.

Subsequently, normalization was performed to reduce the differences in resistance values between the sensors.

$$R_{norm} = \frac{R - R_{min}}{R_{max} - R_{min}} \tag{2}$$

where Rnorm represents the normalized sensor resistance value, R is the original sensor resistance value, and Rmin and Rmax denote the minimum and maximum sensor resistances, respectively.
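The inversion of the NiO readings and the max-min normalization described above can be sketched as follows. This is a minimal illustration only: the function names and the sample resistances are assumptions made for the example and are not taken from the dataset.

```python
def invert(resistances):
    """Take the reciprocal of each NiO reading so a rising curve becomes a falling one."""
    return [1.0 / r for r in resistances]


def min_max(values):
    """Rescale a series to [0, 1] using its own minimum and maximum."""
    lo, hi = min(values), max(values)
    if hi == lo:
        raise ValueError("a constant series cannot be min-max normalized")
    return [(v - lo) / (hi - lo) for v in values]


nio = [2.0, 4.0, 8.0]   # made-up rising NiO resistances
inv = invert(nio)       # now falling, like the other sensors
norm = min_max(inv)     # largest value maps to 1, smallest to 0
```

After inversion the NiO trace decreases over time, so it can be compared directly with the sensors whose resistance falls during heating.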

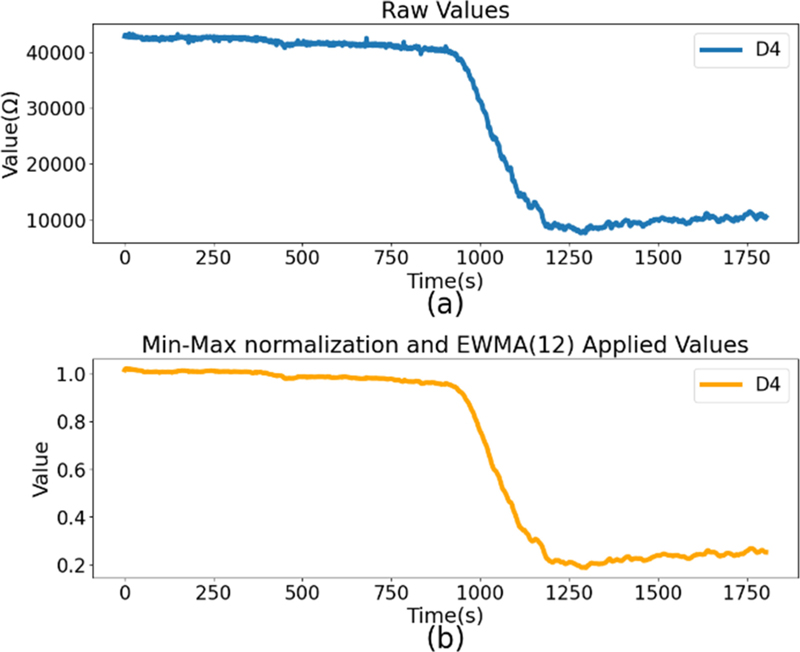

Max-min normalization is a scaling method that adjusts the range of data to [0,1] or [-1,1]. This method is straightforward to implement and can be scaled precisely over an entire data range. However, it is sensitive to outliers that can extend or shrink the overall data range and potentially distort the data distribution. Therefore, in this study, each sensor resistance value was divided by the average value of the sensor data in the non-fire state before heating to preserve the original data flow while reducing the differences in resistance values between the sensors. Fig. 2 shows the sensor graphs obtained after applying the described data-scaling method. The scaled data were smoothed using an exponentially weighted moving average (EWMA). The tap size was determined to be 12, considering the periodicity of the data and level of noise. The EWMA function assigns greater weights to more recent data, reduces noise, and creates a smoother trend, thus yielding more reliable data. This method is well-suited for real-time data processing because it can be efficiently updated as new data arrive. In this study, normalized and averaged data were used to extract data from 300 s before ignition to 700 s after ignition, which were then divided into smaller time intervals. This interval represents a critical period in the transition from a non-fire to a fire state, and the range was segmented for the experiment to enable rapid detection.
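As a rough sketch of the scaling and smoothing steps described above, the code below divides a trace by its pre-heating average and then applies an exponentially weighted moving average. The mapping from tap size to smoothing factor, `alpha = 2 / (span + 1)`, is an assumption borrowed from a common convention; the text does not state which mapping was used, and the sample trace is invented.

```python
def scale_by_baseline(values, n_baseline):
    """Divide every reading by the mean of the first n_baseline (non-fire) readings."""
    baseline = sum(values[:n_baseline]) / n_baseline
    return [v / baseline for v in values]


def ewma(values, span=12):
    """Exponentially weighted moving average.

    alpha = 2 / (span + 1) is an assumed convention, not taken from the paper.
    """
    alpha = 2.0 / (span + 1)
    smoothed = [values[0]]
    for v in values[1:]:
        # Recent data get weight alpha; the running average keeps the rest.
        smoothed.append(alpha * v + (1 - alpha) * smoothed[-1])
    return smoothed


raw = [10.0, 10.0, 10.0, 10.0, 5.0, 5.0, 5.0]   # made-up resistance trace
scaled = scale_by_baseline(raw, n_baseline=4)    # non-fire level becomes 1.0
smooth = ewma(scaled, span=3)                    # small span keeps the numbers readable
```

Because each new output depends only on the newest reading and the previous output, the filter can be updated one sample at a time, which is what makes it convenient for real-time use.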

Kendall's tau is a statistical method used to measure the rank correlation between two variables. By applying Kendall's tau trend algorithm to the sensor data, it was possible to identify rank correlations among the observed resistance values of the sensor data. The trends in the data included both the magnitude and direction of the changes. Although fire parameters vary when different substances are combusted, the direction of change tends to be similar during the early stages of a fire. The Kendall rank correlation coefficient is typically defined in statistics as follows. When given paired data between two variables X and Y: for any two pairs (Xi, Yi) and (Xj, Yj), if Xi < Xj and Yi < Yj or Xi > Xj and Yi > Yj, then the pair is concordant (+1). If Xi < Xj and Yi > Yj or Xi > Xj and Yi < Yj, then the pair is discordant (-1). In other words, when X and Y increase, the pair is considered concordant, whereas when X increases and Y decreases, the pair is considered discordant [11]. Kendall's tau coefficient is simplified as

$$\tau = \frac{n_c - n_d}{n(n-1)/2} \tag{3}$$

where n_c and n_d are the numbers of concordant and discordant pairs, respectively, and the denominator represents the total number of pairs (xi,yi) and (xj,yj) that can be formed from n observations. Thus, Equation 3 expresses the difference between the numbers of concordant and discordant pairs as a fraction of all pairs. Since the denominator is the total number of pair combinations, the coefficient must fall within the range −1 ≤ τ ≤ 1. If the rankings between two variables are in perfect agreement, the coefficient will have a value of 1; if they are in perfect disagreement, the coefficient will have a value of –1. In this study, the direction of change for each sensor’s resistance was individually extracted and represented as a trend value, which was then used as an input parameter for the model. The Kendall's tau trend algorithm was modified according to the recursive formula [9]. In Equation 4, y(n) represents the trend value calculated by the Kendall's tau trend algorithm.

| (4) |

In Equation 5, u(x) represents the unit step function, which returns 1 when the input value is greater than or equal to 0 and 0 when the input value is less than 0. The overall structure of the double summation calculates the trend for all possible time differences within the data and accumulates the results. The calculated y(n) represents the trend of the data at a given time step, allowing for the analysis of changes according to the order of the data.

$$u(x) = \begin{cases} 1, & x \ge 0 \\ 0, & x < 0 \end{cases} \tag{5}$$

To better detect the rising and falling trends of fire signals, the Kendall's tau algorithm can be modified by replacing the unit step function u(x) with the signum function sgn(x) and omitting the u(x) term as shown in Equation 6. This modification reduces the computational complexity compared to the original approach.

| (6) |

The final modification involves updating the trend value y(n) at the current time step n using Equation 7, which incorporates information from the trend value at the previous time step y(n−1), as well as the sum of the sign functions for the current data x(n) and the previous values x(n−i) within a range of N. This sum reflects how the trend value at the current time step is updated based on both the previous trend value and the changes in data at the current time step.

| (7) |

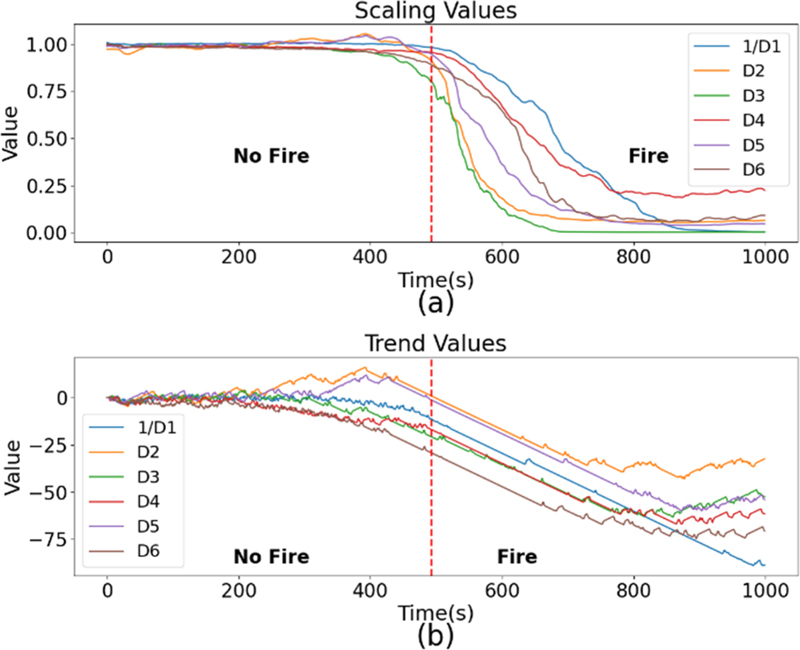

The data, which were separated around the ignition point after undergoing normalization and averaging, were processed to extract the direction of the fire parameters as trend values using the modified Kendall’s tau trend algorithm expressed in Equation 7. This trend was calculated in a normalized form to construct the dataset. After conducting several experiments, the window tap size for the observational data was determined to be optimal at 4, indicating that the current data and previous four data points were calculated as one set. Fig. 3 shows the Kendall's tau trend values extracted using a window tap size of 12. These trend values exhibited faster changes in the early stage of a fire compared with those of standard sensor readings. This indicates that even when using conventional fire detection thresholds, the detection time can be effectively reduced. This characteristic allows for the identification of fire signs in a shorter period, serving as a crucial indicator that can significantly enhance fire detection accuracy.
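To make the idea of a windowed Kendall's tau trend concrete, the sketch below computes the coefficient directly from concordant and discordant pairs and applies it to the current reading plus the previous N readings, using the time order as the second variable. This is a direct, non-recursive illustration and not the modified recursive algorithm used in this study; the function names, the tie handling, and the sample series are assumptions.

```python
from itertools import combinations


def kendall_tau(x, y):
    """Kendall's tau as (concordant - discordant) / total number of pairs."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length of at least 2")
    concordant = discordant = 0
    for (xi, yi), (xj, yj) in combinations(zip(x, y), 2):
        product = (xi - xj) * (yi - yj)
        if product > 0:
            concordant += 1
        elif product < 0:
            discordant += 1
        # Tied pairs (product == 0) count as neither.
    n_pairs = len(x) * (len(x) - 1) // 2
    return (concordant - discordant) / n_pairs


def trend_series(values, N=4):
    """Trend at each step n >= N over the current reading and the previous N readings."""
    time_index = list(range(N + 1))
    return [
        kendall_tau(time_index, values[n - N:n + 1])
        for n in range(N, len(values))
    ]


falling = [5, 5, 5, 5, 4, 3, 2]          # made-up trace that starts to drop
print(trend_series(falling, N=4))        # → [-0.4, -0.7, -0.9]
```

In this toy series the trend value moves toward −1 as soon as the readings begin to fall, even though the readings themselves have changed only slightly, which is the behaviour that makes trend values useful for early detection.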

Labeling is the process of assigning a specific class or category to each data point and is essential for supervised learning. Here, both primary and secondary labeling were performed to designate the fire and non-fire classes. In the primary labeling stage, classes were assigned based on the sensor resistance values. The D3 sensor, which had the fastest response and most significant change in resistance, was used as a reference. If the resistance value of sensor D3 fell below a certain threshold, the data were labeled as fire (Class 1); otherwise, they were labeled as non-fire (Class 0). In the secondary labeling stage, the data labeled in the primary stage were relabeled using a sliding window algorithm. The sliding window method divides time-series data into small window units to generate continuous subsets, allowing for the capture and analysis of dynamic changes in the time-series data. The sensor data, trend values, and primary label values were windowed using window tap sizes of 10, 15, and 20. If the proportion of fire data within a window exceeded the predefined fire threshold (40% or 80%), the window was relabeled as fire (Class 1). This approach augmented the data while preserving the temporal structure of the time-series data and defined the final training data labels based on the fire proportion.
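The two labeling stages can be sketched as below. The resistance threshold of 0.7, the window size of 4, and the sample readings are hypothetical values chosen to keep the example short; the text does not give the actual primary threshold, and the study used window tap sizes of 10, 15, and 20.

```python
def primary_labels(reference, threshold):
    """Label a sample as fire (1) when the reference sensor falls below the threshold."""
    return [1 if r < threshold else 0 for r in reference]


def sliding_windows(rows, labels, size, fire_ratio):
    """Cut overlapping windows and relabel each one by its share of fire samples."""
    windows, window_labels = [], []
    for start in range(len(rows) - size + 1):
        chunk = labels[start:start + size]
        windows.append(rows[start:start + size])
        # A window is fire only if the fire share strictly exceeds the ratio.
        window_labels.append(1 if sum(chunk) / size > fire_ratio else 0)
    return windows, window_labels


reference = [1.0, 1.0, 0.9, 0.6, 0.5, 0.4]      # made-up scaled reference readings
labels = primary_labels(reference, threshold=0.7)
rows = [[r] for r in reference]                  # one feature per time step here

windows, at_40 = sliding_windows(rows, labels, size=4, fire_ratio=0.4)
_, at_80 = sliding_windows(rows, labels, size=4, fire_ratio=0.8)
print(labels, at_40, at_80)   # → [0, 0, 0, 1, 1, 1] [0, 1, 1] [0, 0, 0]
```

The lower threshold marks a window as fire earlier in the transition, whereas the higher threshold waits until most of the window is already in the fire state.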

2.3 Training Model

The labeled dataset was divided into two types for model training depending on whether trend values were included. One type used only sensor data as input, whereas the other used both sensor data and trend values extracted using Kendall's tau algorithm. Three types of RNN models were employed for time-series data modeling: LSTM, BiLSTM, and GRU. LSTM is designed to effectively learn long-term dependencies by addressing the vanishing or exploding gradient problems inherent in traditional RNNs. BiLSTM enhances the ability to capture the overall context by incorporating a bidirectional structure that utilizes both past and future contextual information. The GRU is a simplified version of the LSTM that uses only reset and update gates, thereby reducing computational complexity. These models are highly capable of remembering past information and relating it to current data, making them effective for capturing complex patterns of temporal phenomena such as the occurrence of fire. Given the importance of utilizing future information for fire prediction, the BiLSTM, which considers both past and future contexts, was selected as the final training model. For a comprehensive performance analysis, LSTM and GRU models from the same RNN family were implemented and compared. For training, the data were divided into training, validation, and test sets in a 6:2:2 ratio. The data were transformed into a 3-dimensional format suitable for RNN input, and the model was optimized through a hidden-layer configuration and hyperparameter tuning. A single-node output layer with a sigmoid activation function was used to determine the occurrence of fires. The loss function was binary cross-entropy, and the Adam optimizer was used.
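The 6:2:2 split and the sigmoid output with binary cross-entropy loss can be illustrated without a deep learning framework, as in the sketch below. The recurrent layers themselves are omitted. Splitting in the stored order without shuffling is an assumption, since the text does not say how samples were assigned to each set, and all names are illustrative.

```python
import math


def split_622(samples):
    """Split into training, validation, and test sets in a 6:2:2 ratio (no shuffling)."""
    n = len(samples)
    n_train = n * 6 // 10
    n_val = n * 2 // 10
    return (
        samples[:n_train],
        samples[n_train:n_train + n_val],
        samples[n_train + n_val:],
    )


def sigmoid(z):
    """Squash the single output node's value into a fire probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def binary_cross_entropy(y_true, y_prob, eps=1e-12):
    """Mean binary cross-entropy between 0/1 labels and predicted probabilities."""
    total = 0.0
    for t, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)   # avoid log(0)
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(y_true)


train, val, test = split_622(list(range(10)))
loss = binary_cross_entropy([1, 0], [sigmoid(0.0), sigmoid(0.0)])
```

An output of 0 from the final node corresponds to a probability of 0.5, for which the loss equals ln 2 regardless of the true label; training drives the loss below this value by pushing probabilities toward the correct class.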

Through this model-training process, an effective fusion of multi-sensor data and trend values was achieved, resulting in the development of a fire-prediction model. By utilizing the recurrent neural network structure, which is advantageous for modeling time-series patterns, and the ability of BiLSTM to leverage future information, a high fire-prediction performance was anticipated.

3. RESULTS AND DISCUSSIONS

Various experiments were conducted to evaluate and compare the performance of the proposed fire-prediction system. The fire-prediction accuracies of the LSTM, BiLSTM, and GRU models were measured under different conditions such as the inclusion of trend values, data window tap size, and fire threshold. The experimental results indicate that the BiLSTM model generally achieved the highest accuracy. This was attributed to the ability of the BiLSTM to utilize both past and future contextual information, which is crucial for fire occurrence prediction, as future data play an important role. Consequently, BiLSTM, which considers bidirectional information, outperformed the unidirectional LSTM. The GRU model also recorded accuracy levels similar to those of BiLSTM. This similarity in performance can be explained by the fact that the trend values extracted using Kendall's tau algorithm summarized critical future information, thereby reducing the relative advantage of BiLSTM's bidirectional capabilities. The most noteworthy result was the improvement in accuracy when trend values were included as inputs. Cases in which sensor data were augmented with trend values consistently recorded a higher fire-prediction accuracy than cases in which only sensor data were used. Notably, both the BiLSTM and GRU models achieved a peak accuracy of 99.93% with a window tap size of 20 and a fire threshold of 40%. In the case of conventional methods that consider only current values, both the BiLSTM and GRU models recorded an accuracy of 99.90% with the same window size and fire ratio, which was slightly lower than when the trend values were included. Similarly, for the LSTM model, an accuracy of 99.08% was achieved when trend values were included, compared with 98.94% when they were not included. This result shows a 0.17% reduction in the error rate compared with the LSTM model [10], which predicted fires using eight gas sensors.
The accuracy improved even though the number of sensors used in the experiment was reduced by two. These results demonstrate the effectiveness of the proposed approach in enhancing fire-detection performance by integrating multi-sensor data and trend information and applying recurrent neural network models. The use of trend values effectively captured temporal change patterns in the data, and deep learning models specializing in time-series modeling, such as BiLSTM, were able to effectively learn the complex temporal dependencies associated with fire events. Ultimately, the synergy between multidimensional data fusion, trend analysis, and deep learning techniques led to a significant improvement in fire-prediction accuracy. The proposed method was based on sensor data measured at various temperatures, providing the advantage of identifying fires under various temperature conditions. The results were supplemented using data augmentation techniques because of the short duration of the data collection. However, if sensor performance deteriorates, as in the case of a long-term drift, the accuracy of the training model may decrease significantly. Further research is required to address this issue.
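Because the reported accuracies are close to 100%, the effect of the trend values is easier to read as an error rate. The short calculation below uses only the accuracies stated above for this study; the dictionary layout and function name are illustrative.

```python
def error_rate(accuracy_pct):
    """Convert an accuracy in percent to an error rate in percent (two decimals)."""
    return round(100.0 - accuracy_pct, 2)


# (with trend values, without trend values), as reported in the text
reported = {"BiLSTM/GRU": (99.93, 99.90), "LSTM": (99.08, 98.94)}

reductions = {}
for model, (with_trend, without_trend) in reported.items():
    reductions[model] = round(
        error_rate(without_trend) - error_rate(with_trend), 2
    )

print(reductions)   # → {'BiLSTM/GRU': 0.03, 'LSTM': 0.14}
```

For the BiLSTM and GRU models, this corresponds to the error rate falling from 0.10% to 0.07% when trend values are added, and for the LSTM model from 1.06% to 0.92%.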

4. CONCLUSIONS

We proposed a fire detection method that used multi-sensor fusion and trend information. This method predicted fire occurrence by inputting the data collected from six types of gas sensors and trend values extracted using Kendall's tau algorithm into a BiLSTM model. To overcome the limitations of traditional fire detection systems that rely solely on single-sensor data or instantaneous data values, we utilized data collected from a multi-sensor module. Even if individual sensors are sensitive to specific environmental variables, a multi-sensor system can enhance the overall data quality, thereby contributing to improved detection accuracy. Kendall's tau algorithm enables the identification of rank correlations between observed values in time-series data, representing both the magnitude and direction of change as a trend value. This capability allowed us to effectively capture the trend patterns of gases that emerged during fire events. Fire prediction was performed by inputting the extracted trend values and sensor data into the BiLSTM model, which demonstrated superior performance compared with the LSTM model. The GRU model achieved a level of accuracy similar to that of the BiLSTM. The performance of the proposed system was validated through various experiments. Including trend values with the sensor data resulted in higher fire-prediction accuracy than using sensor data alone. Both the BiLSTM and GRU models achieved the highest accuracy of 99.93% with a window tap size of 20 and a fire threshold of 40%. In conclusion, the proposed BiLSTM-based fire-prediction method that integrated multi-sensor data with trend information was experimentally shown to offer improved fire detection performance compared with existing methods. This approach is expected to help minimize the loss of life and property caused by fires.

Acknowledgments

This research was funded by a 2024 research grant from Sangmyung University (2024-A000-0295).

References

[1] B. Sarwar, I. S. Bajwa, N. Jamil, S. Ramzan, and N. Sarwar, “An intelligent fire warning application using IoT and an adaptive neuro-fuzzy inference system”, Sens., Vol. 19, No. 14, pp. 3150(1)-3150(18), 2019. https://doi.org/10.3390/s19143150

[2] L. Han, C. Yu, K. Xiao, and X. Zhao, “A new method of mixed gas identification based on a convolutional neural network for time series classification”, Sens., Vol. 19, No. 9, pp. 1960(1)-1960(23), 2019. https://doi.org/10.3390/s19091960

[3] S. Saponara, A. Elhanashi, and A. Gagliardi, “Real-time video fire/smoke detection based on CNN in antifire surveillance systems”, J. Real-Time Image Process., Vol. 18, pp. 889-900, 2021. https://doi.org/10.1007/s11554-020-01044-0

[4] P. Li and W. Zhao, “Image fire detection algorithms based on convolutional neural networks”, Case Stud. Therm. Eng., Vol. 19, p. 100625, 2020. https://doi.org/10.1016/j.csite.2020.100625

[5] K. Yan and D. Zhang, “Feature selection and analysis on correlated gas sensor data with recursive feature elimination”, Sens. Actuators B Chem., Vol. 212, pp. 353-363, 2015. https://doi.org/10.1016/j.snb.2015.02.025

[6] B. S. Freeman, G. Taylor, B. Gharabaghi, and J. Thé, “Forecasting air quality time series using deep learning”, J. Air Waste Manag. Assoc., Vol. 68, No. 8, pp. 866-886, 2018. https://doi.org/10.1080/10962247.2018.1459956

[7] L. Xiong, J. An, Y. Hou, C. Hu, H. Wang, Y. Chen, and X. Tang, “Improved support vector regression recursive feature elimination based on intragroup representative feature sampling (IRFS-SVR-RFE) for processing correlated gas sensor data”, Sens. Actuators B Chem., Vol. 419, p. 136395, 2024. https://doi.org/10.1016/j.snb.2024.136395

[8] M. Nakip, C. Güzeliş, and O. Yildiz, “Recurrent trend predictive neural network for multi-sensor fire detection”, IEEE Access, Vol. 9, pp. 84204-84216, 2021. https://doi.org/10.1109/ACCESS.2021.3087736

[9] L. Wu, L. Chen, and X. Hao, “Multi-sensor data fusion algorithm for indoor fire early warning based on BP neural network”, Inf., Vol. 12, No. 2, pp. 59(1)-59(12), 2021. https://doi.org/10.3390/info12020059

[10] S. Kim and K. Lee, “LSTM-based early fire detection system using small amount data”, J. Semicond. Display Technol., Vol. 23, No. 1, pp. 110-116, 2024.

[11] W. Xu, H. Lai, J. Dai, and Y. Zhou, “Spectrum sensing for cognitive radio based on Kendall's tau in the presence of non-Gaussian impulsive noise”, Digit. Signal Process., Vol. 123, p. 103443, 2022. https://doi.org/10.1016/j.dsp.2022.103443