Development and Implementation of a YOLOv5-based Adhesive Application Defect Detection Algorithm

This is an Open Access article distributed under the terms of the Creative Commons Attribution Non-Commercial License(https://creativecommons.org/licenses/by-nc/3.0/) which permits unrestricted non-commercial use, distribution, and reproduction in any medium, provided the original work is properly cited.

Abstract

This study investigated the use of YOLOv5 for defect detection in transparent adhesives, comparing two training methods: one without preprocessing and one with edge operator preprocessing. In the first approach, the model was trained on the original color images, labeled in several different ways, without any transformation. This method failed to distinguish images with properly applied adhesive from those with adhesive application defects. The factors behind this reduced learning performance were analyzed using histogram comparison and template-matching maximum similarity measurements, and detection similarity was quantified by Intersection over Union (IoU) values. In the second approach, the original images were transformed using edge operators before training. The experiments confirmed that the Canny edge detection operator was particularly effective for detecting adhesive application defects and proved the most suitable for real-time defect detection.

Keywords:

YOLOv5, Transparent adhesive, Template matching, Histogram, Canny edge detection

1. INTRODUCTION

1.1 Adhesive Application Issues

Defect detection through real-time inspection in adhesive-application robots is crucial, particularly because adhesive behavior can vary with material properties and environmental conditions. Excessive adhesive application can cause overflow and visible defects, whereas insufficient or improper application can weaken the bonded surface and lead to bond failure.

Recent advances in automation have enabled defect detection following functional inspection in several manufacturing scenarios. However, detecting defects in transparent adhesives with conventional vision algorithms and general methods has been challenging, because the transparent adhesive offers little visual contrast against the underlying surface.

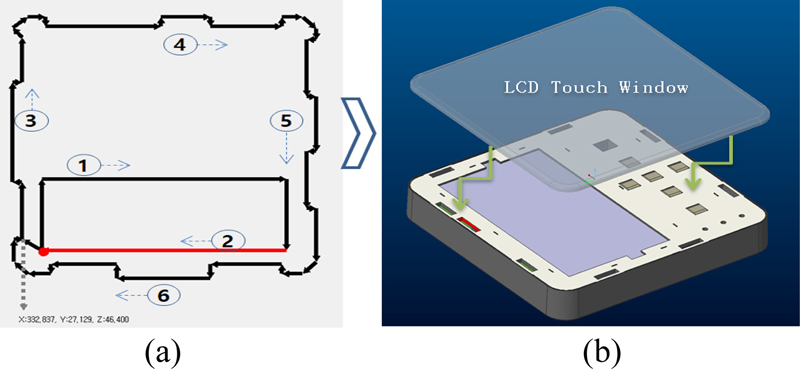

1.2 Experimental Setup

This study employed YOLOv5 to detect transparent adhesive application defects and quantified the similarity between the detected objects and the original images using the Intersection over Union (IoU) metric. For images with properly applied adhesive, the similarity measurement yielded high values with respect to the original image, whereas for images with insufficient or no adhesive application, it yielded low values. The remainder of this paper is structured as follows. Section 2 discusses image processing techniques and the application of YOLOv5 in related technologies. Section 3 details the experimental setup and compares the performance of two training methods: without preprocessing and with edge operator preprocessing. Section 4 presents the conclusions drawn from the experimental and learning results.

2. RELATED RESEARCH

Image processing technology is widely used across diverse fields, including manufacturing, construction, and everyday life. Notable examples include an image-based tire wear diagnosis system [1], real-time pedestrian recognition using HOG with regions of interest (ROI) [2], and concrete crack detection using deep learning and image processing [3]. Recent studies have also reviewed automated pothole detection methods [4] and detected corrosion on steel plates using image segmentation [5]. In object detection, techniques such as template matching and histogram comparison have been employed in developing a normal/abnormal RBC detection algorithm using YOLOv5 [6], analyzing neural network feature maps for vacant parking slot detection [7], and implementing an improved rectangle template matching algorithm for feature point matching [8].

Adhesive-related studies include defect detection in the adhesive layer of thermal insulation materials based on ECT with improved particle swarm optimization [9], optimization of mechanical strength and bonded defect detection in adhesive joints containing boron nitride inclusions [10], and automotive adhesive defect detection using an improved YOLOv8 [15]. Other studies have addressed feature map-based model compression in deep learning [11], an image-based children's height measurement application using computer vision [12], an intelligent missing persons index system using OpenCV image processing and TensorFlow deep learning [13], an OpenCV-based finger recognition system using binary processing and histogram graphs [14], a low-cost OpenCV-based surface pressure analysis system [16], and AlmondNet-20, an improved CNN-based model for automatic almond image annotation [17].

3. EXPERIMENTAL

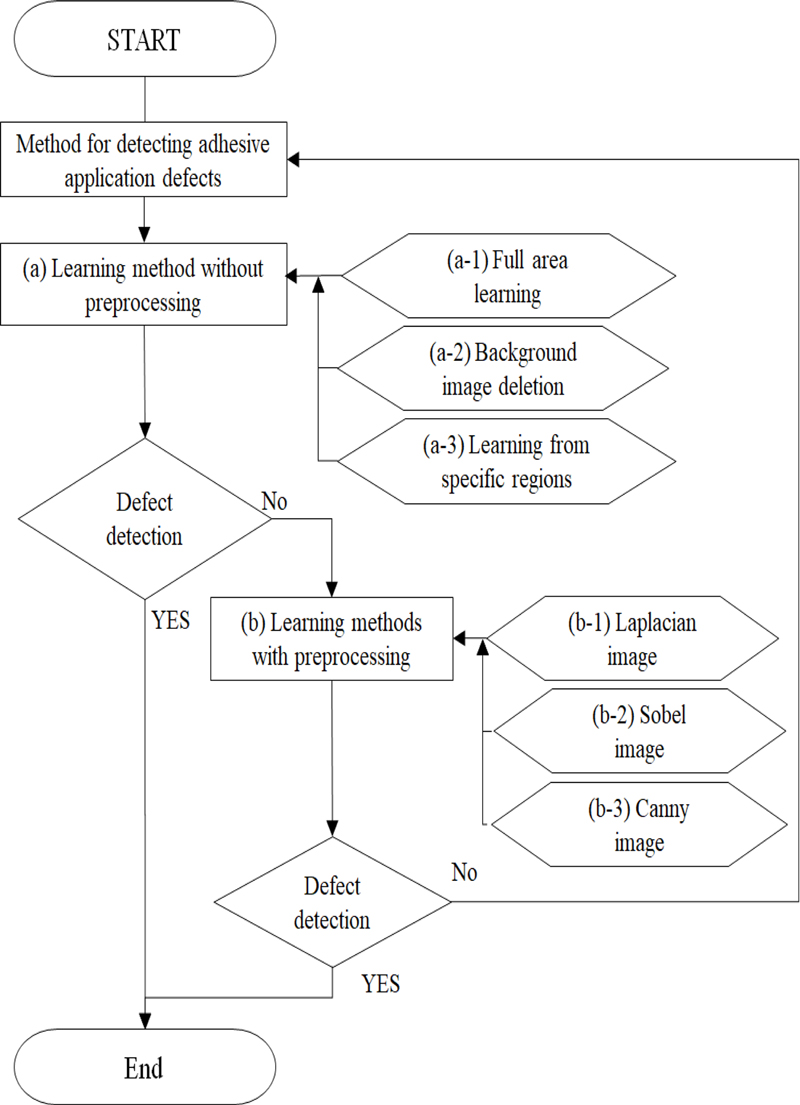

3.1 Method for Detecting Adhesive Application Defects

To detect defects in transparent adhesive applications, experiments were conducted using YOLOv5 with two categories of learning methods, as shown in Fig. 3: three methods without preprocessing and three methods with edge operator preprocessing. In the methods without preprocessing, (a-1) the entire area outside the LCD window, including the transparent adhesive, was used for learning; (a-2) learning focused on the adhesive area after unnecessary background was removed using a polygon tool; and (a-3) only the portion where the adhesive was applied was designated as the learning area. In the methods with edge operator preprocessing, three techniques were applied: (b-1) Laplacian image transformation, (b-2) Sobel image transformation, and (b-3) Canny image transformation. We then experimentally examined whether each category could detect transparent adhesive application defects.

3.2 PC Specification

The specifications of the PCs used in this experiment are listed in Table 1. The image datasets for training were collected from the RoboFlow website, and YOLOv5 training was conducted on Google Colab.

YOLOv5 training proceeds in a stepwise manner. The input image, here a sample in which the adhesive has been applied correctly, is divided into S × S grid cells. If the center point of an object falls within a grid cell, that cell predicts “b” bounding boxes for the object. Each bounding box carries a confidence score between 0 and 1 that reflects how likely it is to contain an object; this score is the product of the probability that an object is present and the IoU between the predicted box and the ground-truth box.
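The grid-cell assignment and confidence score described above can be sketched as follows. This is a minimal illustration that follows the simplified single-grid (YOLOv1-style) description in the text; YOLOv5 itself predicts on several grid scales with anchor boxes. The function names and the 7 × 7 grid are hypothetical, and coordinates are assumed to be normalized to [0, 1].

```python
from typing import Tuple


def responsible_cell(cx: float, cy: float, s: int) -> Tuple[int, int]:
    """Return (row, col) of the S x S grid cell containing a normalized center (cx, cy)."""
    # Clamp so a center lying exactly on the right/bottom border maps to the last cell.
    col = min(int(cx * s), s - 1)
    row = min(int(cy * s), s - 1)
    return row, col


def box_confidence(p_object: float, iou_with_truth: float) -> float:
    """YOLO-style box confidence: Pr(object) multiplied by IoU(predicted box, ground truth)."""
    return p_object * iou_with_truth


# Hypothetical values: a 7 x 7 grid and an object centred at (0.52, 0.31).
print(responsible_cell(0.52, 0.31, 7))  # (2, 3)
print(box_confidence(0.9, 0.8))
```

Because the confidence multiplies the objectness probability by the IoU, a box that is confident but poorly aligned with the ground truth is still scored low during training.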

Each grid cell generates “b” bounding boxes; however, neighboring cells may produce additional bounding boxes that predict the same object. To resolve this, the confidence scores and IoU values are calculated, and only the bounding box with the highest confidence score is retained, while boxes that overlap it strongly are discarded (non-maximum suppression). IoU is an evaluation metric of model accuracy, defined as the ratio of the intersection to the union of the predicted and ground-truth bounding boxes; a higher IoU indicates greater overlap between them. The defect images used for training were processed on the RoboFlow platform, with 200 to 300 image samples per defect type. YOLOv5 training was conducted on Google Colab, with 1,000 iterations per training session.
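The IoU definition and the "keep only the highest-scoring box" rule can be illustrated with a small pure-Python sketch of IoU and greedy non-maximum suppression. Boxes are assumed to be `(x1, y1, x2, y2)` corner coordinates, and the 0.45 overlap threshold is an illustrative assumption, not a value reported in this study.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x1 < x2 and y1 < y2


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float], iou_thresh: float = 0.45) -> List[int]:
    """Greedy non-maximum suppression; returns indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Drop the remaining boxes that overlap the kept box by more than the threshold.
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_thresh]
    return keep


# Hypothetical detections: two overlapping boxes on one object and one separate box.
boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(nms(boxes, scores))  # [0, 2]
```

The first two boxes have an IoU of 81/119 ≈ 0.68, so the lower-scoring one is suppressed, while the distant third box survives.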

3.3 Training method without preprocessing

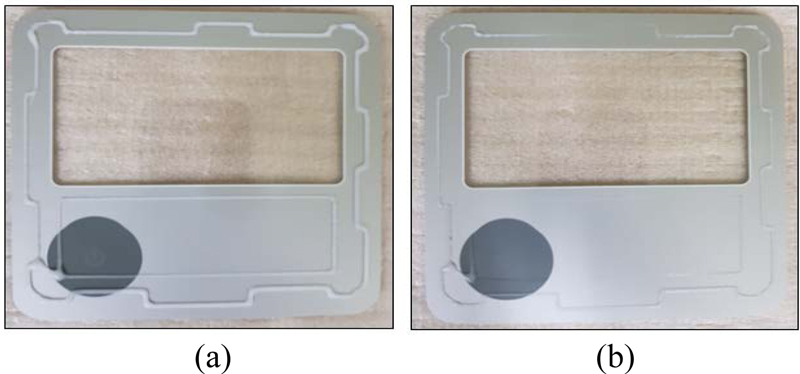

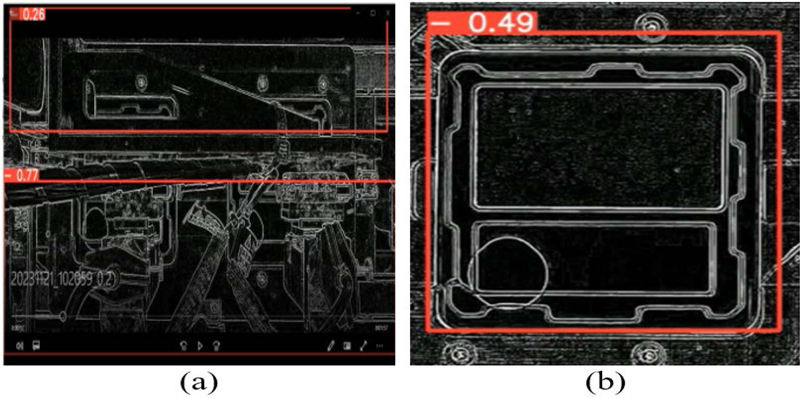

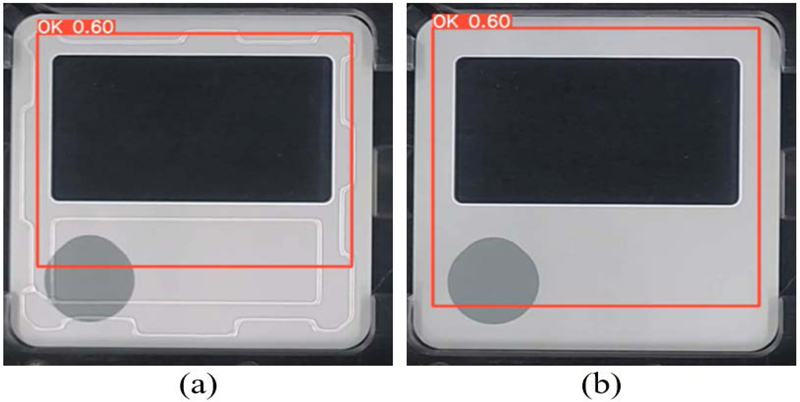

The training method without preprocessing learned directly from the original color images of the applied transparent adhesive, without any preprocessing or transformation of the input images. Three labeling approaches were used: labeling the entire outer region of the image (Fig. 4), removing unnecessary background around the adhesive-applied area using a polygon tool before training (Fig. 5), and training only on the specific sections where the adhesive was applied (Fig. 6).

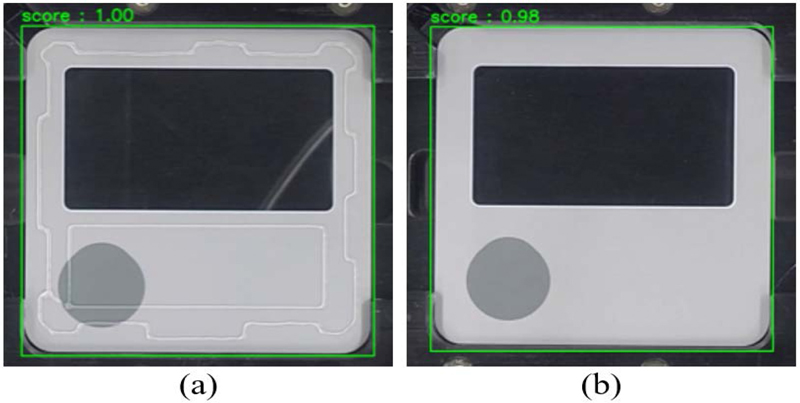

Fig. 4. Learning after designating the entire outer area; (a) Sample without defects, (b) Sample with defects.

Fig. 5. Learning after deleting unnecessary background images; (a) Sample without defects, (b) Sample with defects.

Fig. 6. Learning only partial regions of adhesive application; (a) Sample without defects, (b) Sample with defects.

However, the IoU-based similarity showed no difference between samples with and without transparent adhesive. Therefore, the learning method without preprocessing could not detect adhesive application defects, regardless of how the learning area was labeled.

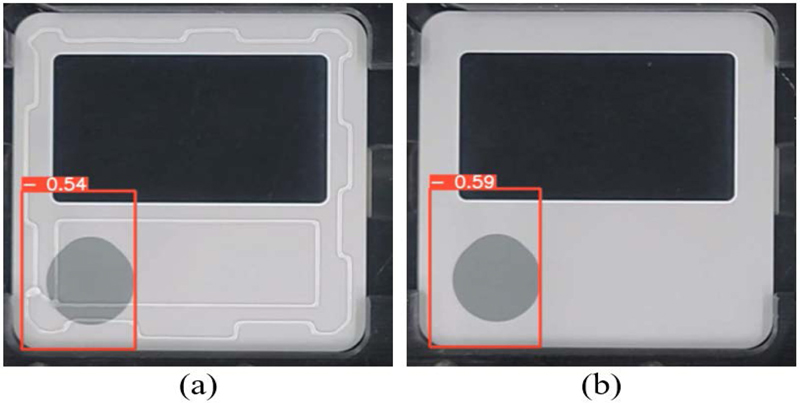

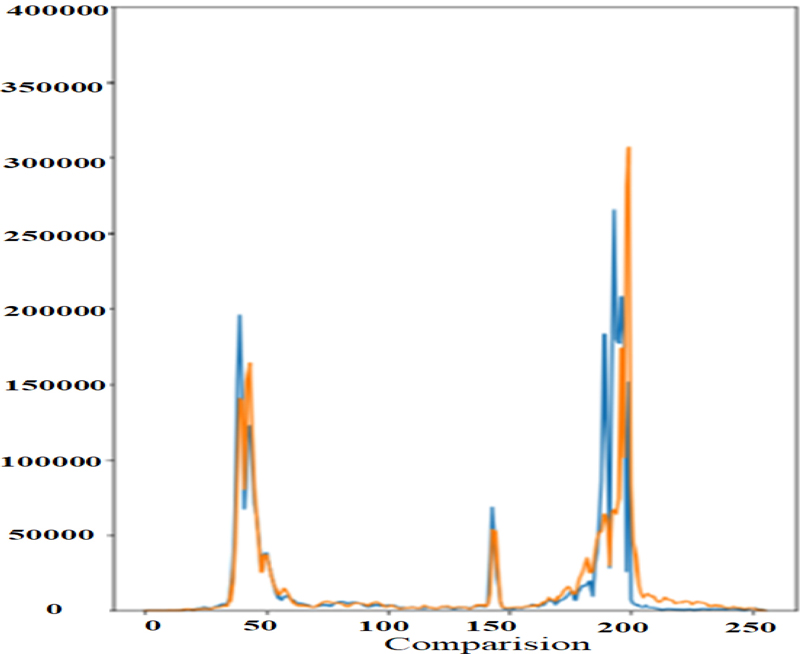

3.4 Template matching and histogram comparison

As discussed in Section 3.3, detecting adhesive application defects with the learning method without preprocessing is challenging because the defect-free and defective images lack distinguishing features. In this section, we examine how the transparency of the adhesive reduces learning ability, using histogram comparison graphs and template-matching maximum similarity measurements, which serve as object detection evaluation metrics. Fig. 7 shows the histogram comparison of defect-free and defective samples, and Fig. 8 shows the template-matching maximum similarity results. No significant difference was observed between the defect-free and defective samples.

Fig. 7. Histogram similarity between defect-free and defective samples (light blue line: original image; orange line: comparison image).

Fig. 8. Maximum similarity of template matching; (a) Sample without defects, (b) Sample with defects.
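As a rough illustration of why histogram comparison cannot separate the two samples, the sketch below compares gray-level histograms using Pearson correlation, the same formula behind OpenCV's `cv2.compareHist` with `cv2.HISTCMP_CORREL`. The paper does not state which comparison method or bin count was used; the correlation method, the 16 bins, and the synthetic pixel values are all assumptions.

```python
import math
from typing import List, Sequence


def gray_histogram(pixels: Sequence[int], bins: int = 16) -> List[int]:
    """Count 8-bit gray levels (0-255) into `bins` equal-width bins."""
    hist = [0] * bins
    for p in pixels:
        hist[p * bins // 256] += 1
    return hist


def hist_correlation(h1: Sequence[float], h2: Sequence[float]) -> float:
    """Pearson correlation between two histograms (1.0 means identical shape)."""
    n = len(h1)
    m1, m2 = sum(h1) / n, sum(h2) / n
    num = sum((a - m1) * (b - m2) for a, b in zip(h1, h2))
    den = math.sqrt(sum((a - m1) ** 2 for a in h1) * sum((b - m2) ** 2 for b in h2))
    return num / den if den > 0 else 0.0


# Hypothetical flattened gray patches: a transparent bead changes only a few pixels.
defect_free = [100] * 90 + [200] * 10
defective = [100] * 88 + [200] * 12
score = hist_correlation(gray_histogram(defect_free), gray_histogram(defective))
print(round(score, 4))
```

Because a transparent bead shifts only a small fraction of pixels, the correlation stays close to 1, which mirrors the high agreement reported between the two samples.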

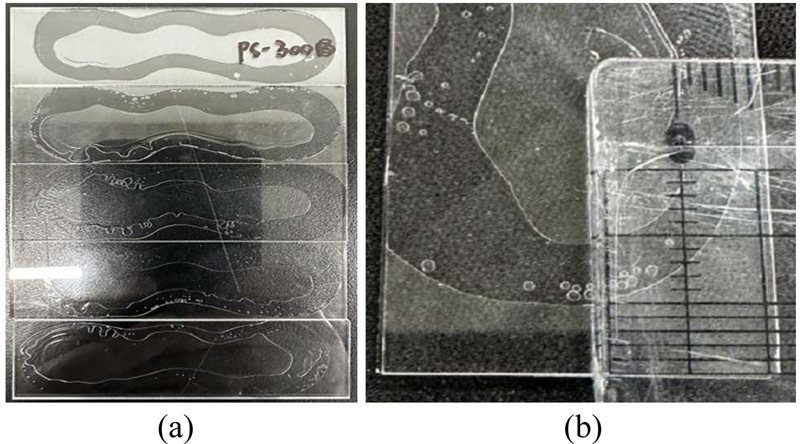

The histogram comparison and template matching showed approximately 98% agreement between the samples with and without adhesive application, indicating that the level of distinction required for defect detection was not achieved. Therefore, for transparent adhesives, an edge operator preprocessing step was introduced before training, as shown in Fig. 9, to improve detection accuracy by emphasizing the adhesive boundary lines.
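The template-matching "maximum similarity" can be sketched as sliding a template over an image and taking the best zero-mean normalized cross-correlation score, which is what OpenCV computes with `cv2.matchTemplate(..., cv2.TM_CCOEFF_NORMED)` followed by `cv2.minMaxLoc`. The paper does not specify the matching mode; this pure-Python version, its function names, and the toy image are illustrative assumptions.

```python
import math
from typing import Sequence, Tuple

Image = Sequence[Sequence[float]]


def ncc(patch: Image, template: Image) -> float:
    """Zero-mean normalized cross-correlation between two equally sized patches."""
    a = [v for row in patch for v in row]
    b = [v for row in template for v in row]
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    num = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    den = math.sqrt(sum((x - ma) ** 2 for x in a) * sum((y - mb) ** 2 for y in b))
    return num / den if den > 0 else 0.0


def max_template_similarity(image: Image, template: Image) -> Tuple[float, Tuple[int, int]]:
    """Slide `template` over `image`; return the best score and its top-left (row, col)."""
    th, tw = len(template), len(template[0])
    best, best_pos = -1.0, (0, 0)
    for r in range(len(image) - th + 1):
        for c in range(len(image[0]) - tw + 1):
            patch = [row[c:c + tw] for row in image[r:r + th]]
            score = ncc(patch, template)
            if score > best:
                best, best_pos = score, (r, c)
    return best, best_pos


# Hypothetical 5x5 image containing the 2x2 template at row 1, column 1.
image = [[0, 0, 0, 0, 0],
         [0, 10, 20, 0, 0],
         [0, 30, 40, 0, 0],
         [0, 0, 0, 0, 0],
         [0, 0, 0, 0, 0]]
template = [[10, 20], [30, 40]]
print(max_template_similarity(image, template))  # (1.0, (1, 1))
```

Since normalized cross-correlation ignores overall brightness and contrast, a nearly invisible adhesive bead leaves the maximum score almost unchanged, which is consistent with the high agreement observed between defect-free and defective samples.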

3.5 Edge Operator Preprocessing Learning Method

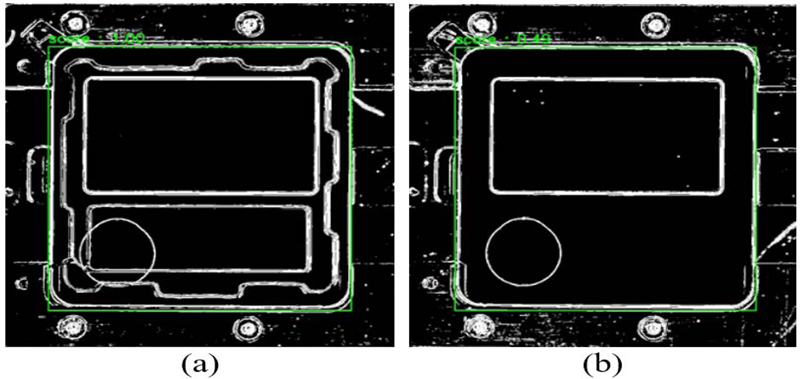

The learning method with a preprocessing step converts an image of the applied adhesive into an edge operator-enhanced image, such as a Laplacian, Sobel, or Canny image, before training. In the edge operator conversion step, the threshold value was adjusted until the edge boundary of the applied adhesive could be clearly identified.
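The effect of the conversion threshold can be illustrated with a Sobel gradient-magnitude edge map followed by binary thresholding. In practice this would be done with OpenCV (for example `cv2.Sobel`); the pure-Python version below, the toy 5 × 5 "faint step" image, and the threshold values 30 and 50 are hypothetical and only show how a threshold that is too high erases a low-contrast adhesive boundary.

```python
import math
from typing import List, Sequence

Image = Sequence[Sequence[float]]
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def correlate3x3(img: Image, k: Sequence[Sequence[int]]) -> List[List[float]]:
    """Apply a 3x3 kernel (without flipping) to interior pixels; borders are left at 0."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            out[r][c] = sum(k[i][j] * img[r + i - 1][c + j - 1]
                            for i in range(3) for j in range(3))
    return out


def sobel_edges(img: Image, threshold: float) -> List[List[int]]:
    """Binary edge map: 1 where the Sobel gradient magnitude exceeds `threshold`."""
    gx = correlate3x3(img, SOBEL_X)
    gy = correlate3x3(img, SOBEL_Y)
    return [[1 if math.hypot(gx[r][c], gy[r][c]) > threshold else 0
             for c in range(len(img[0]))] for r in range(len(img))]


# Hypothetical low-contrast vertical boundary (gray 100 -> 110), like a transparent bead edge.
faint_step = [[100, 100, 110, 110, 110] for _ in range(5)]
for t in (30, 50):
    print(t, sum(map(sum, sobel_edges(faint_step, t))))  # edge-pixel count per threshold
```

Here the boundary produces a gradient magnitude of 40, so it survives a threshold of 30 but disappears entirely at 50, which is why the threshold had to be tuned to the adhesive's low contrast.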

When training with Laplacian-transformed images, learning performance was reduced by interference between the edge boundary and the background image, as shown in Fig. 10. Although adhesive application defects could be detected with IoU = 49% with respect to the original image with adhesive applied, detection accuracy was low in some sections because of this performance degradation.
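One plausible mechanism for this background interference is that the Laplacian, as a second-derivative operator, responds more strongly to isolated noise than to a faint step edge of the same intensity change. The sketch below compares the two using the common 4-neighbor Laplacian kernel; the kernel choice and the synthetic patches are assumptions for illustration, and the paper does not analyze the cause at this level.

```python
from typing import List, Sequence

Image = Sequence[Sequence[float]]
LAPLACIAN_4 = [[0, 1, 0], [1, -4, 1], [0, 1, 0]]


def laplacian(img: Image) -> List[List[float]]:
    """3x3 Laplacian (4-neighbor kernel) on interior pixels; borders stay 0."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            out[r][c] = sum(LAPLACIAN_4[i][j] * img[r + i - 1][c + j - 1]
                            for i in range(3) for j in range(3))
    return out


def peak_abs_response(img: Image) -> float:
    """Largest absolute Laplacian response anywhere in the image."""
    return max(abs(v) for row in laplacian(img) for v in row)


# Hypothetical 5x5 patches with the same 10-level intensity change.
step_edge = [[100, 100, 110, 110, 110] for _ in range(5)]  # faint adhesive boundary
noise = [[100] * 5 for _ in range(5)]
noise[2][2] = 110                                           # single noisy background pixel
print(peak_abs_response(step_edge), peak_abs_response(noise))
```

The single noisy pixel produces a peak response four times larger than the faint step, so any threshold low enough to keep the adhesive edge also keeps background speckle.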

Compared with the Laplacian transformation, the Sobel transformation involves a simpler process and a faster response time, which makes it advantageous for real-time dispensing equipment. However, its performance was inadequate for defect detection, achieving only 49% similarity with respect to the original image with adhesive applied.

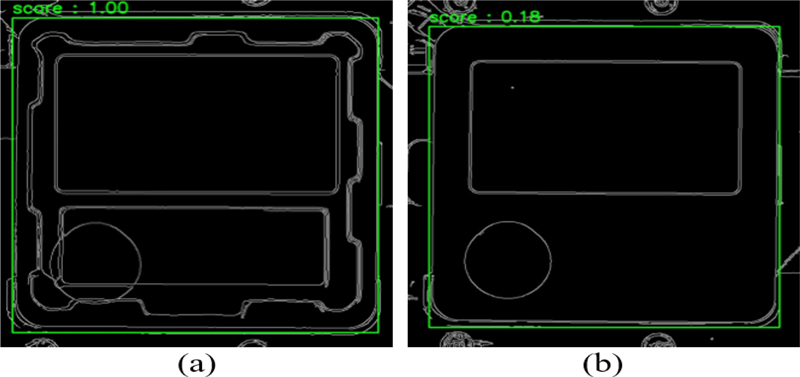

Canny-transformed images were more resistant to background noise than Laplacian-transformed images. Although their response speed was slower than that of Sobel-transformed images, they yielded excellent learning performance.
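The classic Canny detector consists of Gaussian smoothing, gradient computation, non-maximum suppression, and double-threshold hysteresis; OpenCV exposes it as `cv2.Canny(image, threshold1, threshold2)`. The sketch below implements only the hysteresis stage, which is one reason Canny output tends to suppress isolated background responses. The gradient-magnitude map and the `low`/`high` thresholds are hypothetical.

```python
from collections import deque
from typing import List, Sequence


def hysteresis(mag: Sequence[Sequence[float]], low: float, high: float) -> List[List[int]]:
    """Canny-style hysteresis on a gradient-magnitude map.

    Pixels >= high are strong edges; pixels >= low survive only if they are
    8-connected (directly or through other kept pixels) to a strong edge.
    Isolated weak responses, typical of background noise, are discarded.
    """
    h, w = len(mag), len(mag[0])
    out = [[0] * w for _ in range(h)]
    queue = deque((r, c) for r in range(h) for c in range(w) if mag[r][c] >= high)
    for r, c in queue:
        out[r][c] = 1
    while queue:
        r, c = queue.popleft()
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                nr, nc = r + dr, c + dc
                if 0 <= nr < h and 0 <= nc < w and not out[nr][nc] and mag[nr][nc] >= low:
                    out[nr][nc] = 1
                    queue.append((nr, nc))
    return out


# Hypothetical magnitudes: a strong edge pixel with a weak continuation, plus isolated noise.
mag = [[0, 0, 0, 0, 0, 0],
       [0, 90, 40, 40, 0, 0],
       [0, 0, 0, 0, 0, 0],
       [0, 0, 0, 0, 45, 0],
       [0, 0, 0, 0, 0, 0]]
edges = hysteresis(mag, low=30, high=80)
print([(r, c) for r in range(5) for c in range(6) if edges[r][c]])
```

The weak pixels connected to the strong edge are kept, while the equally weak but isolated response at row 3 is dropped, so a faint adhesive boundary can be traced without also keeping scattered noise.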

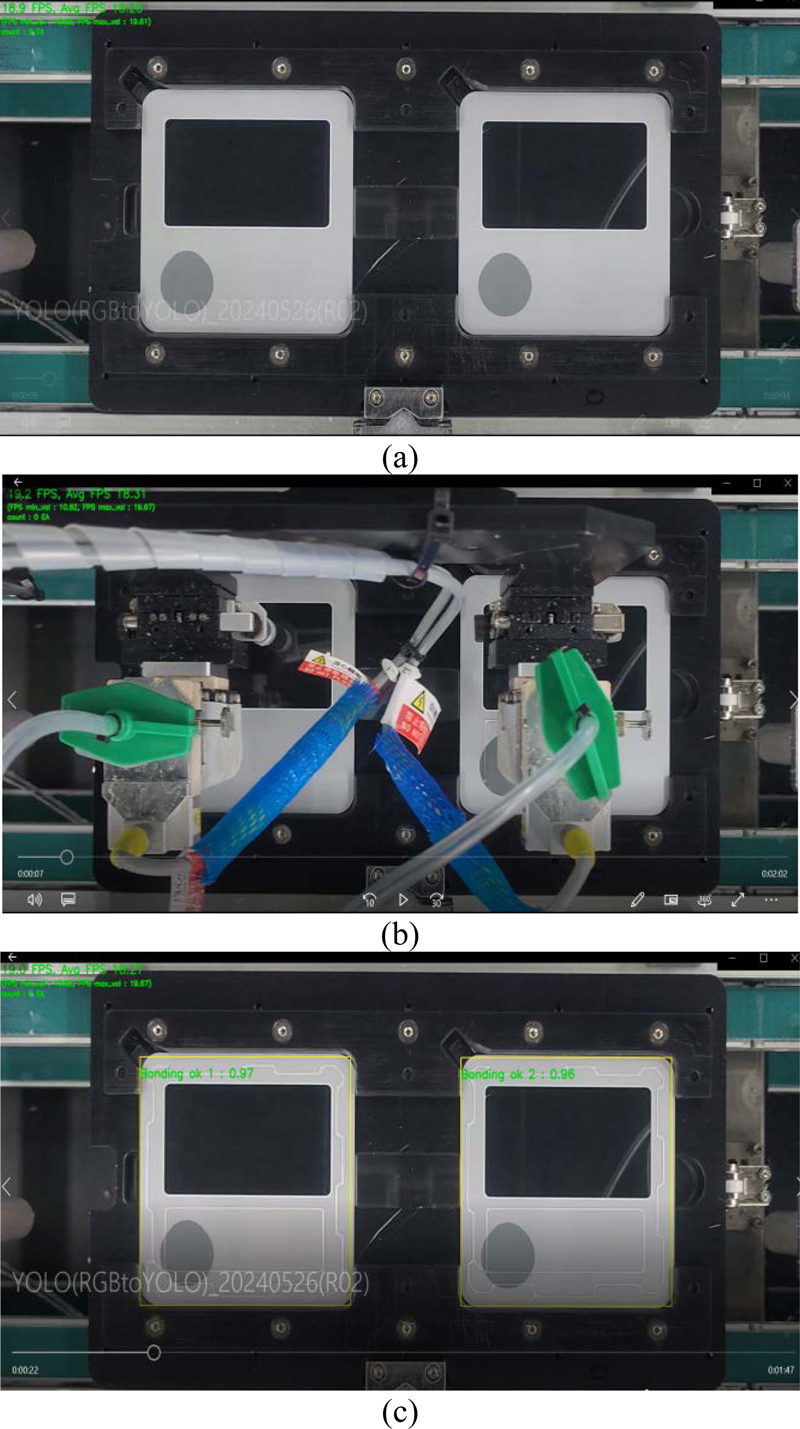

For samples with properly applied adhesive, the similarity measurement yielded IoU = 1.00, whereas for defective samples to which no adhesive was applied, it yielded IoU = 0.18. This large difference enabled an effective distinction between defect-free and defective images, which was the primary objective of this study. Fig. 13 illustrates real-time detection of an actual object captured by the automated adhesive application equipment: panel (a) shows the image before adhesive application, panel (b) shows the image during application, and panel (c) shows the object detection result and similarity measurement (IoU = 0.98) after application. Establishing a similarity criterion for each defect type enables the identification of areas where adhesive application is insufficient.
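A per-defect-type similarity criterion could be sketched as a simple IoU threshold lookup. The paper does not report the threshold values it used; the defect-type names and the thresholds 0.5 and 0.8 below are hypothetical, and only the IoU inputs (1.00, 0.18, and 0.98) come from the results above.

```python
from typing import Dict

# Hypothetical per-defect-type IoU thresholds; not values reported in this study.
IOU_THRESHOLDS: Dict[str, float] = {"missing_adhesive": 0.5, "insufficient_adhesive": 0.8}


def classify(iou_value: float, defect_type: str = "missing_adhesive") -> str:
    """Return 'OK' when IoU against the reference reaches the threshold, else 'DEFECT'."""
    return "OK" if iou_value >= IOU_THRESHOLDS[defect_type] else "DEFECT"


# IoU values from the text: proper application, no adhesive, real-time capture.
for value in (1.00, 0.18, 0.98):
    print(value, classify(value))
```

A stricter threshold for insufficient application would flag partially coated regions that still overlap the reference reasonably well, which is the kind of criterion the last sentence above refers to.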

4. CONCLUSIONS

This study investigated the detection of defects in transparent adhesive applications using two approaches: a learning method without preprocessing and one with edge operator preprocessing. A camera was mounted on an automated adhesive dispensing machine to establish an inspection environment. For the learning method without preprocessing, the root cause of the degraded learning performance was identified and verified through histogram comparison graphs and template-matching maximum similarity measurements. Because of this degradation, transparent adhesive application defects could not be detected with the learning method without preprocessing. This issue was therefore addressed with an edge operator preprocessing method, and learning performance was evaluated after converting the images with edge operator-based techniques, namely Laplacian, Sobel, and Canny transformations. Among these methods, the Canny transformation performed best. This experiment identified the shortcomings of the conventional learning method and proposed a solution for improving its performance.

REFERENCES

- K. J. Kim, “Implementation of an Image Based Tire Wear Diagnosis System”, M. S. thesis, Kyungpook National University, Daegu, 2018.

- J. Y. Lee, “Implementation of Pedestrian Recognition Based on HOG using ROI for Real Time Processing”, J. Inst. Korean Electr. Electron. Eng., Vol. 18, No. 4, pp. 581-585, 2014. [https://doi.org/10.7471/ikeee.2014.18.4.581]

- S. Y. Jung, S. K. Lee, C. I. Park, S. Y. Cho, and J. H. Yu, “A Method for Detecting Concrete Cracks using Deep-Learning and Image Processing”, J. Archit. Inst. Korea Struct. Constr., Vol. 35, No. 11, pp. 163-170, 2019.

- Y. M. Kim, Y. G. Kim, S. Y. Son, S. Y. Lim, B. Y. Choi, and D. H. Choi, “Review of Recent Automated Pothole-Detection Methods”, Appl. Sci., Vol. 12, No. 11, p. 5320, 2022. [https://doi.org/10.3390/app12115320]

- B. S. Kim, Y. W. Kim, and J. H. Yang, “Detection of corrosion on steel plate by using Image Segmentation Method”, J. Korean Inst. Surf. Eng., Vol. 54, No. 2, pp. 84-89, 2021.

- J. G. Kim, J. H. Kang, K. C. Choi, W. K. Jang, H. J. Ha, K. S. Lim, B. Kim, and Y. J. Park, “Development of a Normal/Abnormal RBC Detection Algorithm using YOLOv5”, J. Korean Soc. Manuf. Technol. Eng., Vol. 31, No. 2, pp. 94-100, 2022. [https://doi.org/10.7735/ksmte.2022.31.2.94]

- J. H. Hwang, B. W. Cho, and D. H. Choi, “Feature Map Analysis of Neural Networks for the Application of Vacant Parking Slot Detection”, Appl. Sci., Vol. 13, No. 18, p. 10342, 2023. [https://doi.org/10.3390/app131810342]

- Z. Liu, Y. Guo, Z. Feng, and S. Zhang, “Improved Rectangle Template Matching Based Feature Point Matching Algorithm”, Proc. of Chin. Control Decis. Conf., pp. 2275-2280, Nanchang, China, 2019. [https://doi.org/10.1109/CCDC.2019.8833208]

- Y. Wen, Y. Jia, Y. Zhang, X. Luo, and H. Wang, “Defect Detection of Adhesive Layer of Thermal Insulation Materials Based on Improved Particle Swarm Optimization of ECT”, Sensors, Vol. 17, No. 11, p. 2440, 2017. [https://doi.org/10.3390/s17112440]

- M. Barus, H. Welemane, V. Nassiet, M. Fazzini, and J. C. Batsale, “Boron nitride inclusions within adhesive joints: Optimization of mechanical strength and bonded defects detection”, Int. J. Adhes. Adhes., Vol. 98, p. 102531, 2020. [https://doi.org/10.1016/j.ijadhadh.2019.102531]

- J. H. Hwang, “An Approach to Feature Map Based Model Compression of Deep Learning”, M. S. thesis, Kyungpook National University, Daegu, 2022.

- D. Y. Yun and M. K. Moon, “Development of Kid Height Measurement Application based on Image using Computer Vision”, J. Korea Inst. Electron. Commun. Sci., Vol. 16, No. 1, pp. 117-124, 2021.

- Y. T. Baek, S. H. Lee, and J. S. Kim, “Intelligent Missing Persons Index System Implementation based on the OpenCV Image Processing and TensorFlow Deep-running Image Processing”, J. Korea Soc. Comput. Inf., Vol. 22, No. 1, pp. 15-21, 2017. [https://doi.org/10.9708/jksci.2017.22.01.015]

- Y. T. Baek, S. H. Lee, and J. S. Kim, “Design of OpenCV based Finger Recognition System Using Binary Processing and Histogram Graph”, J. Korea Soc. Comput. Inf., Vol. 21, No. 2, pp. 17-23, 2016. [https://doi.org/10.9708/jksci.2016.21.2.017]

- C. Wang, Q. Sun, X. Dong, and J. Chen, “Automotive adhesive defect detection based on improved YOLOv8”, Signal Image Video Process., Vol. 18, pp. 2583-2595, 2024. [https://doi.org/10.1007/s11760-023-02932-1]

- J. S. Jang and D. H. Choi, “Implementation of an OpenCV based Low-Cost Surface Pressure Analysis System”, Asia-pac. J. Converg. Res. Interchange, Vol. 10, No. 4, pp. 37-49, 2024. [https://doi.org/10.47116/apjcri.2024.04.04]

- M. A. Ilani, A. Kavei, and A. Radmehr, “Automatic Image Annotation (AIA) of AlmondNet-20 Method for Almond Detection by Improved CNN-based Model”, M. S. thesis, University of Tehran, Tehran, Iran, 2024.

- https://www.restack.io/p/yolov5-answer-image-preprocessing-techniques-cat-ai/, (retrieved on Oct. 25, 2024)